While multiple formal investigations into the Trump family and administration continue to unfold, and Drumpf supporters weirdly deny there is any probable cause for concern, Putin’s troll army continues to operate out in the open on Twitter, Facebook, Medium, and other social media networks. The sheer scale of this operation started to become clear to me in the months leading up to Election 2016: bots on Twitter had taken over. I was in a position to notice, having spent a lot of time on social media both professionally and personally for over a decade, as well as a hefty amount of time on political investigation during this presidential cycle.

Whatever your thoughts on the #RussiaGate corruption scandal may be, it should concern any citizen that an enormous group of bad actors is working together to infiltrate American social media with the specific intent to sway politics. Media literacy is one part of the answer, but we’re going to need new tools to help us identify accounts that take part in political discourse only in bad faith: they are not who they claim to be, and their real goals are kept carefully opaque.

Cold War 2.0

We should consider our nation embroiled in a large international game of psychological warfare, or PsyOps as it is referred to in intelligence circles. The goal is to sow disinformation as widely as possible, such that it becomes very difficult to discern what separates truth from propaganda. A secondary goal is to sow dissent among the citizenry, particularly to rile up the extremist factions within America’s two dominant political parties in an attempt to pull the political sphere apart from the center.

We didn’t really need much help in that department as it is, with deep partisan fault lines having been open as gaping wounds on the American political landscape for some decades now. The dramatically escalated troll army operation has acted as an intense catalyst, further igniting the powder kegs stored up between conservatives and progressives in this country.

Luckily there are some ways to blunt the opposition’s ability to distract and spread disinfo by identifying the signatures given off by suspicious accounts. I’ve developed a few ways to evaluate whether a given account may be a participant in paid propaganda, or at least is likely to be misrepresenting who they say they are and what their agenda is.

Sometimes it’s fun to get embroiled in a heated “tweetoff,” but I’ve noticed how easy it is to feel “triggered” by something someone says online and how the opposition is effectively “hacking” that tendency to drag well-meaning people into pointless back-and-forths designed not to defend a point of view, but simply to waste an activist’s time, demoralize them, and occupy the focus — a focus that could be better spent elsewhere on Real Politics with real citizens who in some way care about their country and their lives.

Bots on Twitter have “Tells”

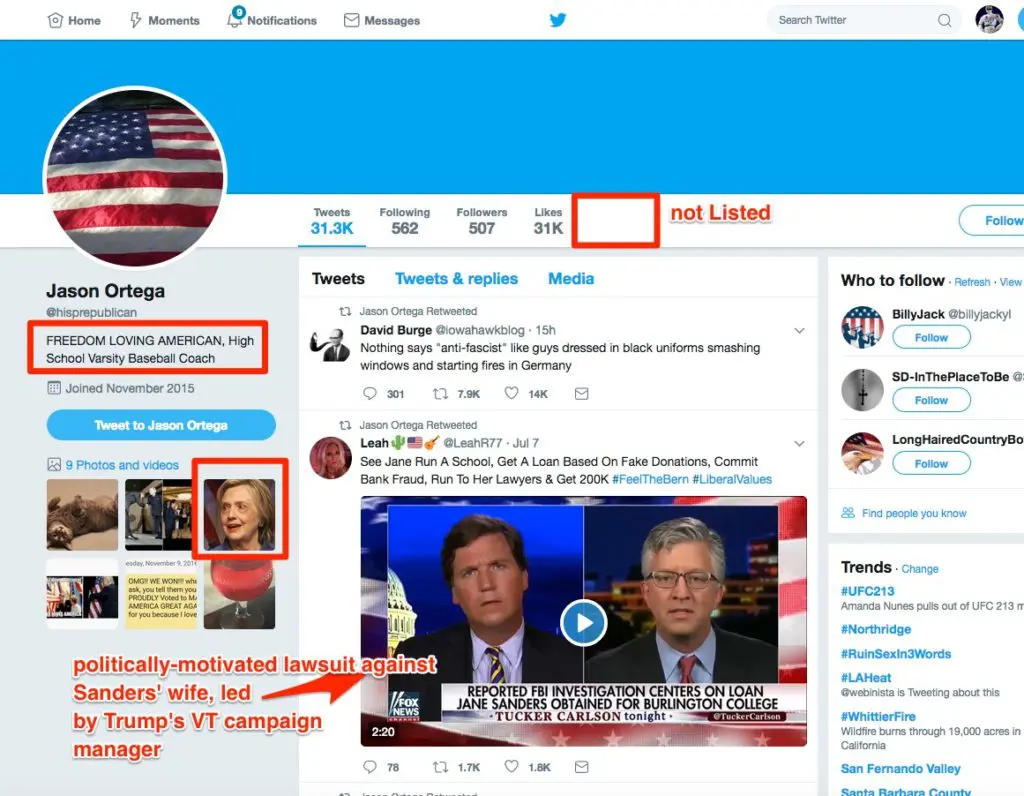

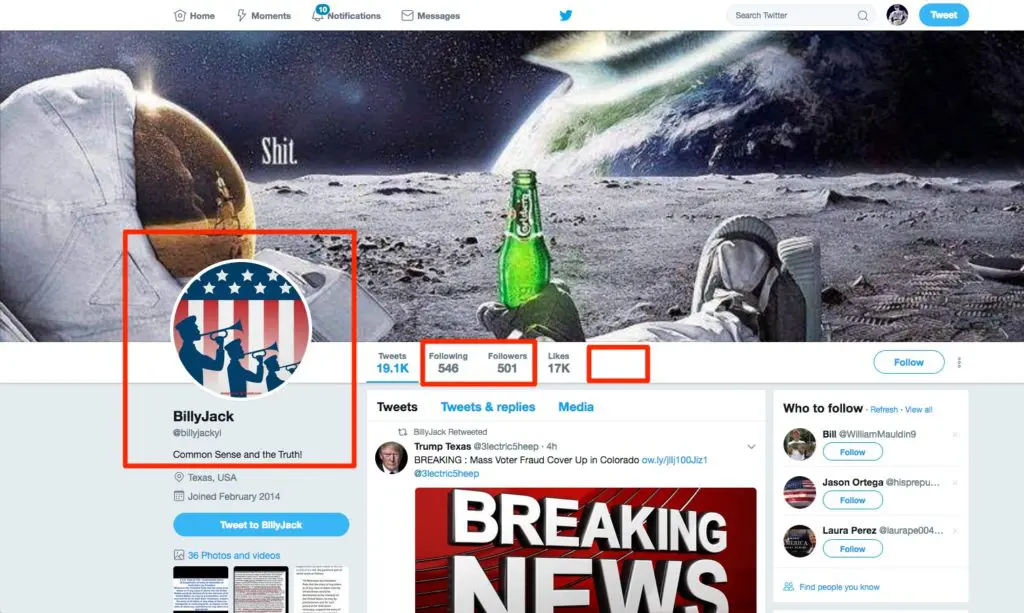

1) Hyper-patriotism

– Conspicuously hyper-patriotic bio (and often, name)

– Posts predominantly anti-Democrat, anti-liberal/libtard, anti-Clinton, anti-Sanders, anti-antifa etc. memes:

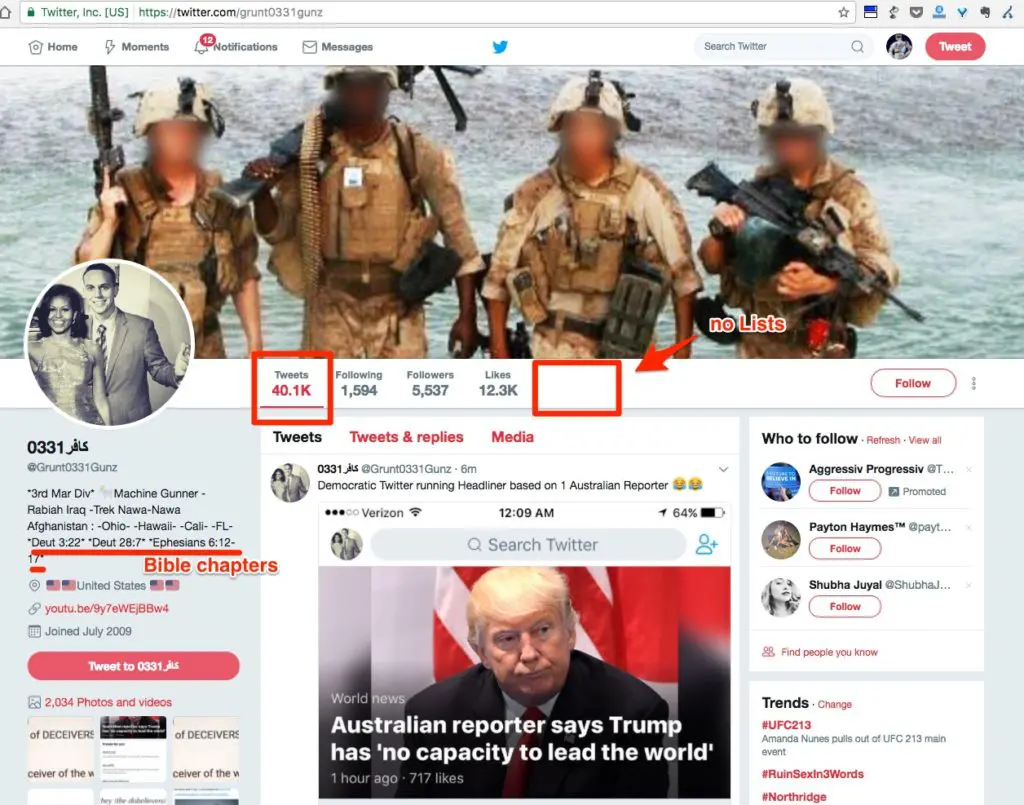

2) Hyper-Christianity

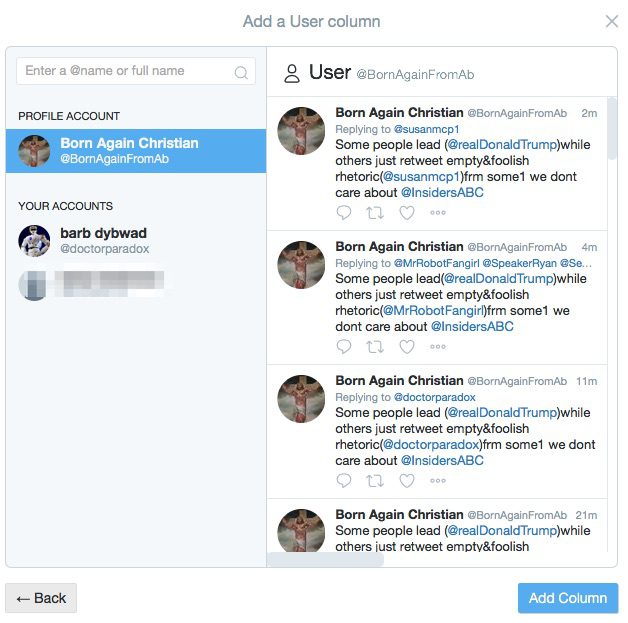

– Conspicuously hyper-Christian bio and/or name:

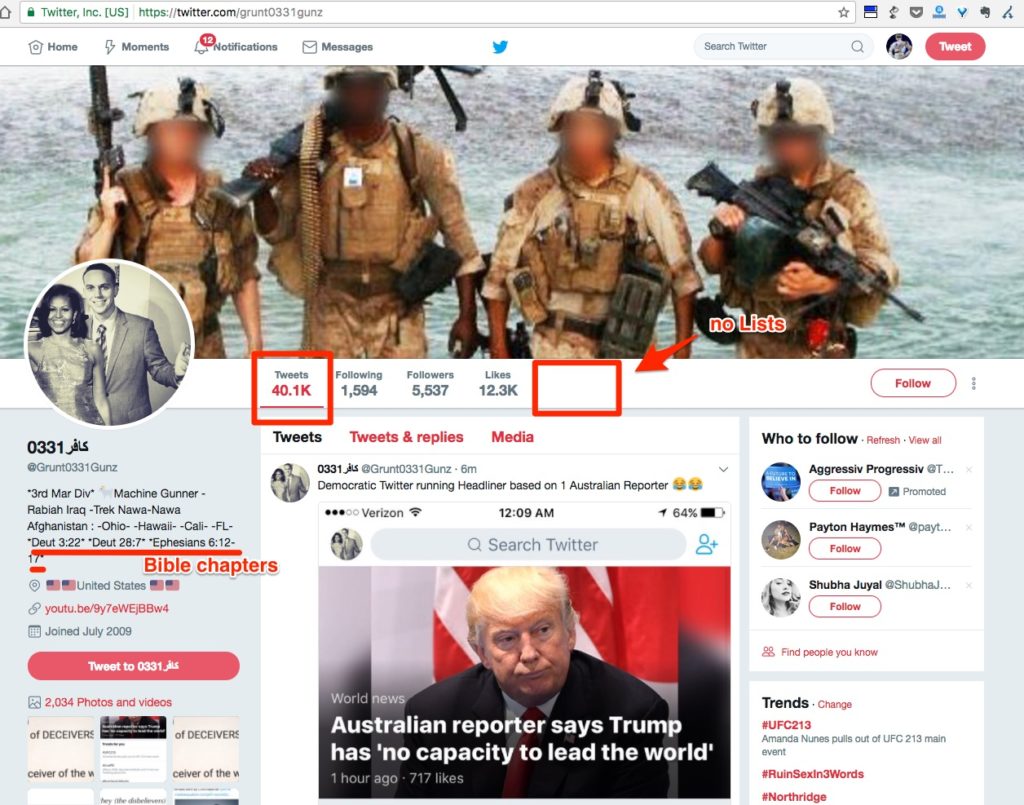

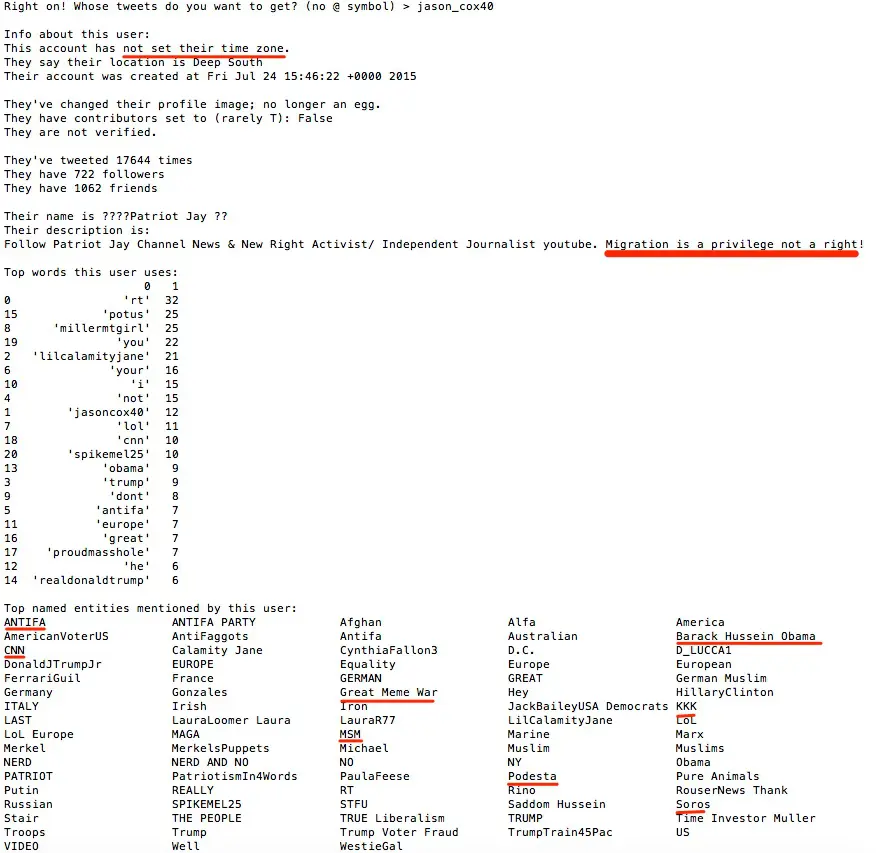

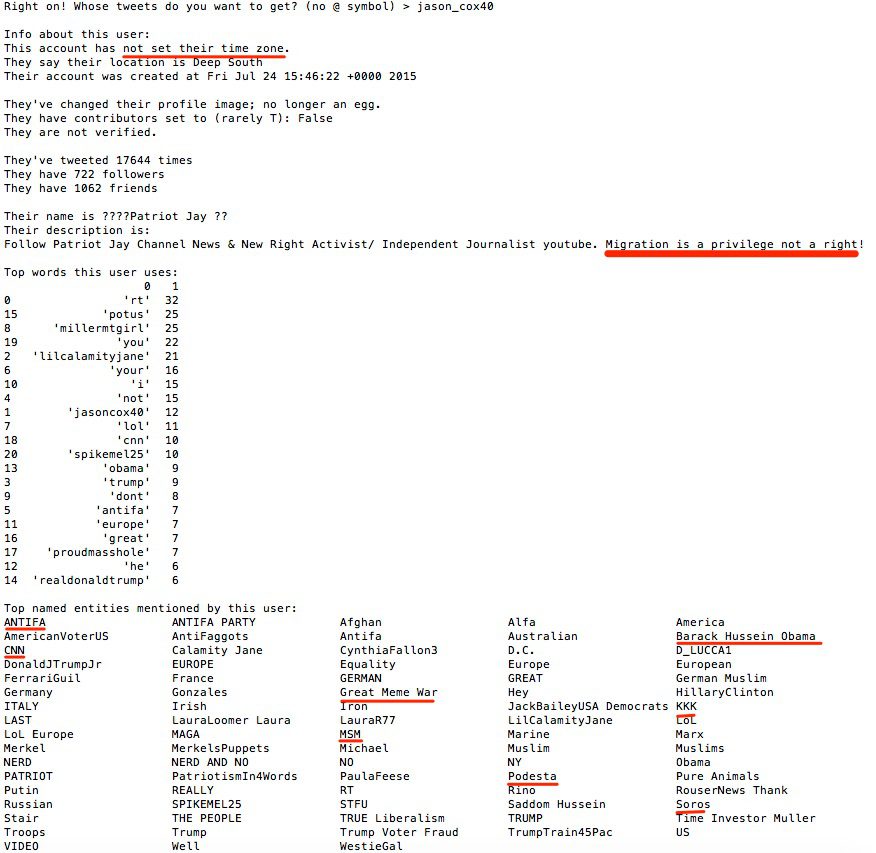

3) Abnormally high tweet volume

Seems to tweet and/or RT constantly without breaks. That is supporting evidence of a scheduler tool at minimum, and some accounts display obviously automated responses. The above account, for example, was started less than 2 years ago, yet has tweeted 15,000 more times than I have in over 10 years of frequent use (28K). Most normal people don’t schedule their tweets, but marketers and PR people do.
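To put a number on "abnormally high," one rough approach is to divide an account's lifetime tweet count by its age in days. The sketch below is only an illustration, not the tool mentioned in this post: the function name `tweets_per_day` is made up here, and the account creation dates are hypothetical stand-ins chosen to match the "less than 2 years" and "over 10 years" figures above.

```python
from datetime import datetime, timezone


def tweets_per_day(total_tweets, created_at, now=None):
    """Average tweets per day over the lifetime of an account."""
    now = now or datetime.now(timezone.utc)
    # Guard against brand-new accounts by treating age as at least one day.
    age_days = max((now - created_at).total_seconds() / 86400, 1.0)
    return total_tweets / age_days


# Hypothetical dates; the tweet counts follow the comparison in the text
# (28K for a 10-year-old account, 15,000 more for the younger one).
now = datetime(2017, 6, 1, tzinfo=timezone.utc)
suspect = tweets_per_day(43_000, datetime(2015, 9, 1, tzinfo=timezone.utc), now)
veteran = tweets_per_day(28_000, datetime(2007, 6, 1, tzinfo=timezone.utc), now)

print(f"suspect: {suspect:.1f}/day, veteran: {veteran:.1f}/day")
```

A high daily average is not proof of automation on its own (some humans really do tweet that much), so it probably works best as one signal among several.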

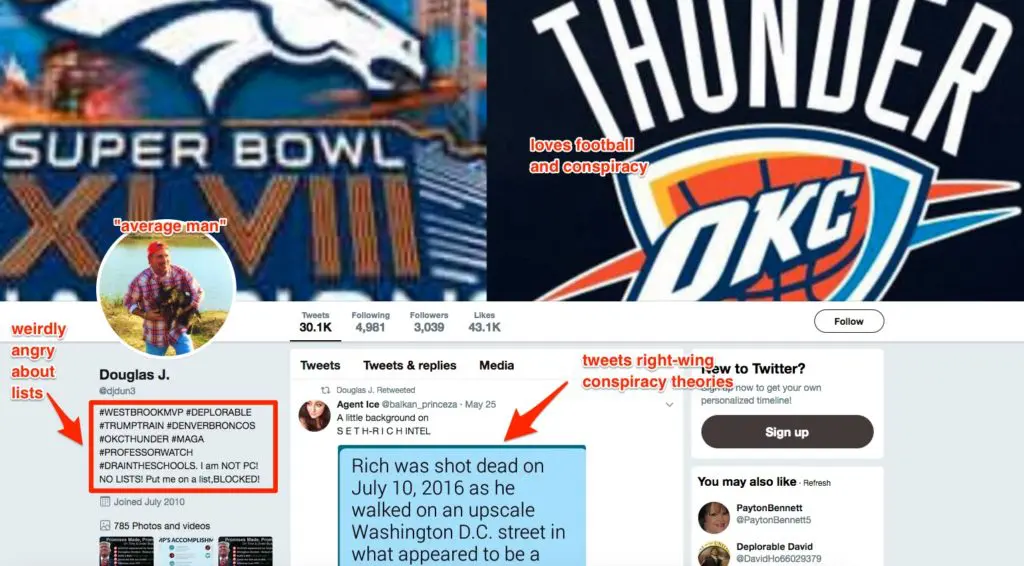

4) Posts only about politics and one other thing (usually a sport)

– Posts exclusively about politics and potentially one other primary “normie” topic, which is often a sport

– May proclaim to be staunchly not “politically correct”:

5) Hates Twitter Lists

– Bots on Twitter have a strange aversion to being added to Lists, or making Lists of their own:

6) Overuse of hashtags

– Uses hashtags more than normal, non-marketing people usually do:
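Hashtag overuse is easy to measure once you have a batch of an account's tweets as plain strings. This is a minimal sketch under that assumption; `hashtags_per_tweet` is a name invented for illustration, the regex is a simplification of how Twitter actually parses hashtags, and what counts as "more than normal" is a judgment call you would need to calibrate against accounts you trust.

```python
import re

# Simplified pattern: a '#' followed by letters, digits, or underscores.
HASHTAG = re.compile(r"#\w+")


def hashtags_per_tweet(tweets):
    """Mean number of hashtags per tweet in a list of tweet texts."""
    if not tweets:
        return 0.0
    total = sum(len(HASHTAG.findall(text)) for text in tweets)
    return total / len(tweets)


sample = ["#MAGA #Trump2016 wake up #tcot", "no tags here"]
print(hashtags_per_tweet(sample))
```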

7) Pushes a one-dimensional message

– Seems ultimately too one-dimensional and predictable to reflect a real personality, and/or too vaguely similar to the formula:

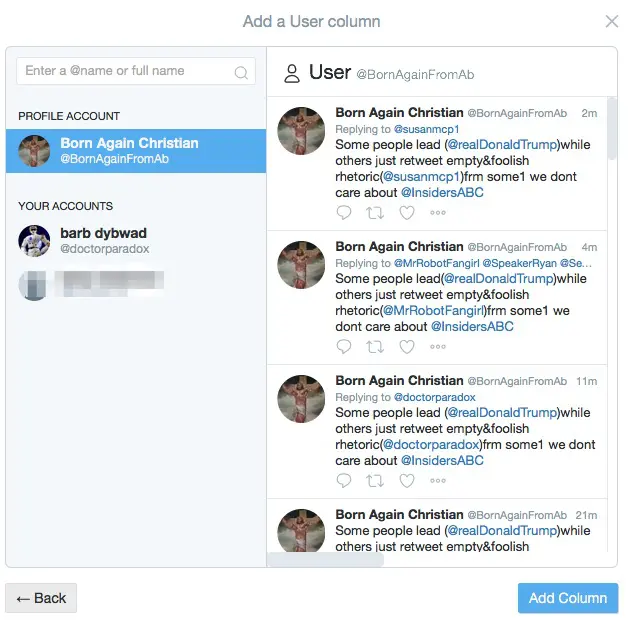

8) Redundant tweets

– Most obviously of all, it retweets the same thing over and over again:
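Repetition can be scored as the share of tweets that duplicate an earlier one. The sketch below assumes you already have the tweet texts in a list; `normalize` and `duplicate_share` are hypothetical helpers, and the normalization (lowercasing, dropping links, collapsing whitespace) is just one plausible choice so that the same message with a different shortened URL still counts as a repeat.

```python
import re
from collections import Counter


def normalize(text):
    """Lowercase, strip URLs, and collapse whitespace."""
    text = re.sub(r"https?://\S+", "", text.lower())
    return " ".join(text.split())


def duplicate_share(tweets):
    """Fraction of tweets that repeat an earlier tweet after normalizing."""
    if not tweets:
        return 0.0
    counts = Counter(normalize(text) for text in tweets)
    # Every occurrence beyond the first of a given text is a repeat.
    repeats = sum(n - 1 for n in counts.values())
    return repeats / len(tweets)


timeline = [
    "RT @a: Wake up!",
    "rt @a: wake up!  http://t.co/x",
    "something else",
    "RT @a: Wake up!",
]
print(duplicate_share(timeline))
```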

9) Rehashes a familiar set of memes

– Tweets predominantly about a predictable set of memes:

Mismatched location and time zone is another “tell.” Although you can’t get the second piece of data from the public profile, it is available from the Twitter API. If you know Python and/or feel adventurous, I’m sharing an earlier version of the above tool on GitHub (and need to get around to pushing the latest version…), and if you know of any other “tells,” please share by commenting or tweeting at me. Next bits I want to work on include:

- Examining follower & followed networks against a matchlist of usual suspect accounts

- Looking at percentage of Cyrillic characters in use

- Graphing tweet volume over time to identify “bot” and “cyborg” periods

- Looking at “burst velocity” of opposition tweets as bot networks are engaged to boost messages

- Digging deeper into the overlap between the far-right and far-left as similar memes are implanted and travel through both “sides” of the networks
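Two of the items above are simple enough to sketch right away. What follows is a rough illustration and not the code from the GitHub tool: `cyrillic_share` and `hourly_volume` are hypothetical names, the Cyrillic check assumes the Unicode ranges U+0400 to U+052F (Cyrillic plus Cyrillic Supplement) are enough, and the timestamps are assumed to be already parsed into `datetime` objects.

```python
from collections import Counter
from datetime import datetime


def cyrillic_share(text):
    """Fraction of alphabetic characters that fall in the Cyrillic blocks."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    cyrillic = sum(1 for ch in letters if "\u0400" <= ch <= "\u052f")
    return cyrillic / len(letters)


def hourly_volume(timestamps):
    """Count tweets per clock hour, keyed by the start of each hour."""
    return Counter(
        ts.replace(minute=0, second=0, microsecond=0) for ts in timestamps
    )


stamps = [
    datetime(2017, 1, 1, 3, 5),
    datetime(2017, 1, 1, 3, 50),
    datetime(2017, 1, 1, 4, 1),
]
volume = hourly_volume(stamps)
print(cyrillic_share("Привет world"), volume.most_common(1))
```

The hourly counts can be fed straight into any plotting library; long stretches with no sleep gap, or sudden spikes across many accounts at once, would be the patterns worth a closer look.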
